From Replit Hacks to a 24/7 Production ML App: My AWS Deployment Journey

In September 2024, I built a project called "SocialKart" for a hackathon. It was an AI-powered tool that turns an Instagram video post into a ready-to-use e-commerce product listing. The idea was simple: give it an Instagram post URL, and it would extract the media, analyze the frames, transcribe the audio, and use an LLM to generate a full product description, complete with images.

Building the ML pipeline was fun. Deploying it? That was a battle against platform limitations, security triggers, and my own understanding of what "production" really means. At the time, I only demoed it on my local machine. Coming back to it now, I wanted to showcase it to people without needing to have my laptop with me all the time. This is the story of how I went from cheap hacks to a stable, automated, 24/7 backend on AWS.

The Application: What Does SocialKart Do?

At its core, the backend pipeline is a multi-stage process:

- Media Scraping: It logs into Instagram (this becomes important later) and downloads the video and caption from a given post URL.

- Frame Analysis: The video is broken down frame-by-frame. An ONNX/PyTorch model classifies each frame to find the most relevant ones for an e-commerce listing (e.g., clear product shots).

- Audio Transcription: The audio is extracted from the video and transcribed into text.

- LLM Generation: The original caption, the transcribed text, and other metadata are fed to Google's Gemini LLM, which generates a structured JSON output for a product name, description, key features, and more.

- Final Output: The generated text and the best frames are compiled into a preview that looks like an Amazon product page.
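The frame-analysis stage boils down to "sample, score, keep the best". The sketch below is hypothetical, not SocialKart's actual code: `score_frame` stands in for the ONNX/PyTorch classifier and is assumed to return a probability that a frame is a clear product shot, and the `stride`, `top_k` and `min_gap` values are illustrative assumptions.

```python
from typing import Callable, List, Sequence


def select_best_frames(
    frames: Sequence[object],
    score_frame: Callable[[object], float],
    top_k: int = 4,
    stride: int = 10,
    min_gap: int = 30,
) -> List[int]:
    """Return indices of up to top_k high-scoring frames, in video order.

    score_frame is a stand-in for the frame classifier (hypothetical).
    Only every `stride`-th frame is scored, and chosen frames must be at
    least `min_gap` frames apart so near-duplicate shots are skipped.
    """
    # Score a subsample of frames; adjacent frames are usually near-identical.
    scored = [(score_frame(frames[i]), i) for i in range(0, len(frames), stride)]
    # Highest score first; ties broken by the earlier frame index.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))

    chosen: List[int] = []
    for _score, idx in scored:
        if len(chosen) == top_k:
            break
        # Greedily keep a frame only if it is far enough from every kept frame.
        if all(abs(idx - c) >= min_gap for c in chosen):
            chosen.append(idx)
    return sorted(chosen)
```

The minimum gap is one simple way to stop the listing from showing several nearly identical frames taken from the same moment of the video.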
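Because the LLM is asked for structured JSON, it is worth validating the reply before building the product page from it. A minimal sketch, assuming hypothetical field names (`product_name`, `description`, `key_features`) since the real schema isn't shown here. It also strips a Markdown code fence, which LLMs sometimes wrap around JSON output.

```python
import json
from typing import Any, Dict

FENCE = "`" * 3  # a Markdown code fence marker

# Hypothetical schema: field name -> expected Python type after json.loads.
REQUIRED_FIELDS = {
    "product_name": str,
    "description": str,
    "key_features": list,
}


def parse_listing(raw_reply: str) -> Dict[str, Any]:
    """Parse an LLM reply into a listing dict, raising ValueError if malformed."""
    text = raw_reply.strip()
    if text.startswith(FENCE):
        # Drop the opening fence line (which may carry a language tag)
        # and everything from the closing fence onward.
        text = text.split("\n", 1)[1] if "\n" in text else ""
        text = text.rsplit(FENCE, 1)[0]
    try:
        data = json.loads(text)
    except json.JSONDecodeError as exc:
        raise ValueError(f"LLM reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("Expected a JSON object at the top level")
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), expected_type):
            raise ValueError(f"Missing or invalid field: {field!r}")
    return data
```

Failing loudly here means a malformed generation can be retried or reported to the user instead of producing a half-empty product page.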

The whole thing is served via a Flask-SocketIO API to provide real-time progress updates to the frontend.
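The real-time progress updates come down to the pipeline calling a callback after each stage. Below is a minimal sketch, assuming each stage is a plain function that takes and returns a shared `context` dict; the stage names and the payload shape are hypothetical. In a Flask-SocketIO app, `emit` would wrap something like `socketio.emit("progress", payload)`; here it is any callable, so the sketch runs without a server.

```python
from typing import Any, Callable, Dict, List, Tuple

Stage = Callable[[Dict[str, Any]], Dict[str, Any]]
Emit = Callable[[Dict[str, Any]], None]


def run_pipeline(
    stages: List[Tuple[str, Stage]],
    context: Dict[str, Any],
    emit: Emit,
) -> Dict[str, Any]:
    """Run stages in order, reporting progress before and after each one."""
    total = len(stages)
    for step, (name, stage) in enumerate(stages, start=1):
        emit({"stage": name, "status": "started", "step": step, "total": total})
        try:
            context = stage(context)
        except Exception as exc:
            # Tell the client which stage failed, then let the caller handle it.
            emit({"stage": name, "status": "error", "message": str(exc)})
            raise
        emit({"stage": name, "status": "done", "step": step, "total": total,
              "percent": round(100 * step / total)})
    return context
```

For example, `run_pipeline([("scrape", scrape), ("transcribe", transcribe)], {"url": post_url}, emit)` would produce four events, ending with `"percent": 100`, where `scrape` and `transcribe` are placeholder stage functions.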

The Deployment Saga: A Series of Unfortunate Events

My goal was simple: get a 24/7 public endpoint for my portfolio. My initial attempts were... not so successful.

Problem 1: The Replit Uptime Hack is Dead

Back in the day, I used a classic trick to host my Discord bots for free: run the bot on Replit alongside a small Flask web server, then use a service like UptimeRobot to ping the Repl's URL every 5 minutes so the server never went to sleep.

The Reality in 2025: This no longer works. Replit has changed its policies, and you now need to have the Replit tab constantly open for the server to stay alive. This was a dead end for a 24/7 portfolio project.

Problem 2: Azure's "Free" Tier Wasn't Free Enough

My next stop was Azure. It offers credits and a free tier, but the limitations were immediately apparent: the basic virtual machines available to me crashed before they could even load PyTorch into memory. The resources were simply too limited for an ML workload. It was time to get serious.

The Solution: A Real Deployment on AWS EC2

I decided to switch to AWS, and this is where I finally found the power and flexibility I needed. But it wasn't a straight path to success. I had to solve a series of real-world deployment problems one by one.

Challenge 1: The Instagram Login Blockade

The very first feature of my app was scraping Instagram. It worked perfectly on my local machine. On EC2, it failed instantly.

The Problem: Instagram's security is smart. It can tell a login from a residential IP address (my home WiFi) apart from a login from a known data center IP address (an AWS server). Even when I used an EC2 instance in the Mumbai region (close to my location), the login was flagged as suspicious, triggering a "Checkpoint required" error.

The Solution: The Session File Method

I realized I couldn't log in with a password on the server. The fix was to separate the login from the operation:

- Log in locally: Run a Python script on my home computer that logs in and saves the session state to a file (e.g., <username>.session).

- Upload the session: Use scp (Secure Copy) to upload this session file to the EC2 instance.

- Use the session on EC2: Modify the server code to only use L.load_session_from_file(). It never attempts to log in with a password, completely bypassing the security check.

This worked! But it created a new problem: these sessions expire.

Challenge 2: Making the App Run 24/7

Just running python3 app.py in an SSH terminal is temporary. The moment I closed my laptop, the server would die.

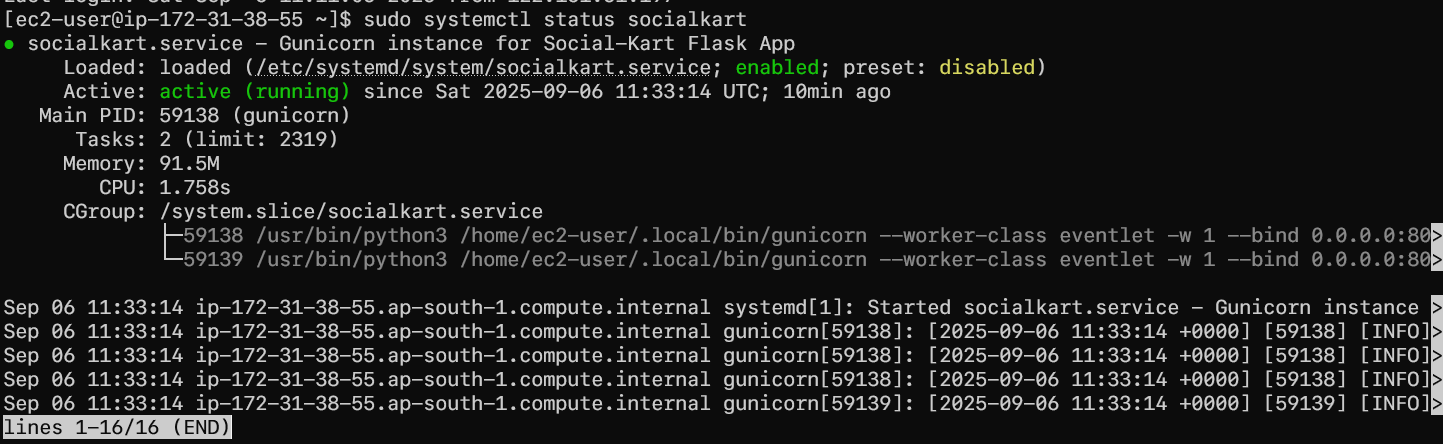

The Solution: systemd

The professional way to run a background process on Linux is to create a service. I created a systemd service file that told the OS how to manage my app.

```ini
# /etc/systemd/system/socialkart.service
[Unit]
Description=Gunicorn instance for Social-Kart Flask App
After=network.target

[Service]
User=ec2-user
Group=ec2-user
WorkingDirectory=/home/ec2-user/backend
# -w 1: Flask-SocketIO supports only a single Gunicorn worker process.
ExecStart=/home/ec2-user/backend/venv/bin/gunicorn --worker-class eventlet -w 1 --bind 0.0.0.0:8000 app:app
# Restart the app automatically if it crashes.
Restart=always

[Install]
WantedBy=multi-user.target
```

With these commands, my app was officially a 24/7 service:

```bash
sudo systemctl daemon-reload
sudo systemctl enable socialkart
sudo systemctl start socialkart
```

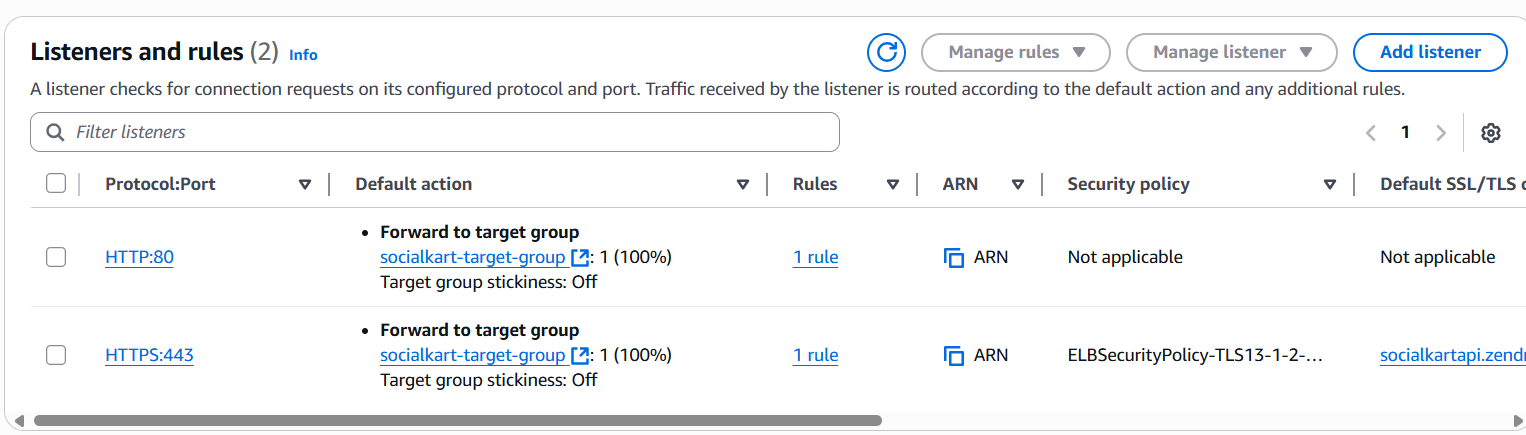

Challenge 3: Getting a Real HTTPS Endpoint

My app was running, but it was trapped inside the EC2 instance on port 8000. I needed a real, secure URL like https://socialkartapi.zendrix.dev. This required a full production setup.

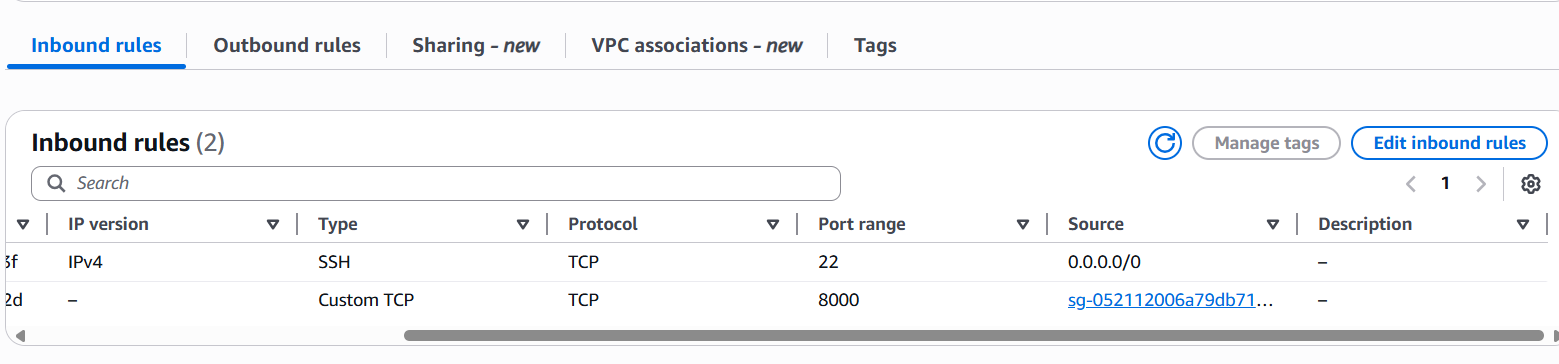

The Solution: The ALB + ACM + DNS Stack

This was a multi-step process, and getting the "firewall" rules right was the key.

- ACM (Certificate Manager): I requested a free SSL/TLS certificate for socialkartapi.zendrix.dev. This involved adding a CNAME record to my domain's DNS settings on Name.com to prove ownership.

- ALB (Application Load Balancer): I created a Load Balancer to be the public "front door". It was configured to listen on ports 80 (HTTP) and 443 (HTTPS, using the ACM certificate) and forward all traffic to a "Target Group".

- Target Group: This group pointed to my EC2 instance on port 8000. The Health Check was crucial here. It periodically requests / on my app to make sure it's alive. If the instance fails its health checks, the ALB stops sending traffic to it.

- Security Groups (The Firewall): This was the trickiest part. Two firewalls needed to be configured perfectly:

  - The Load Balancer's Firewall: Allows traffic from the internet (0.0.0.0/0) on ports 80 and 443.

  - The EC2 Instance's Firewall: Allows traffic only from the Load Balancer's security group on port 8000. This protects my app from direct exposure.

- Final DNS Connection: The last step was to go to Name.com and create a final CNAME record that pointed socialkartapi to the long DNS name of my new Load Balancer.

With that, https://socialkartapi.zendrix.dev was live!

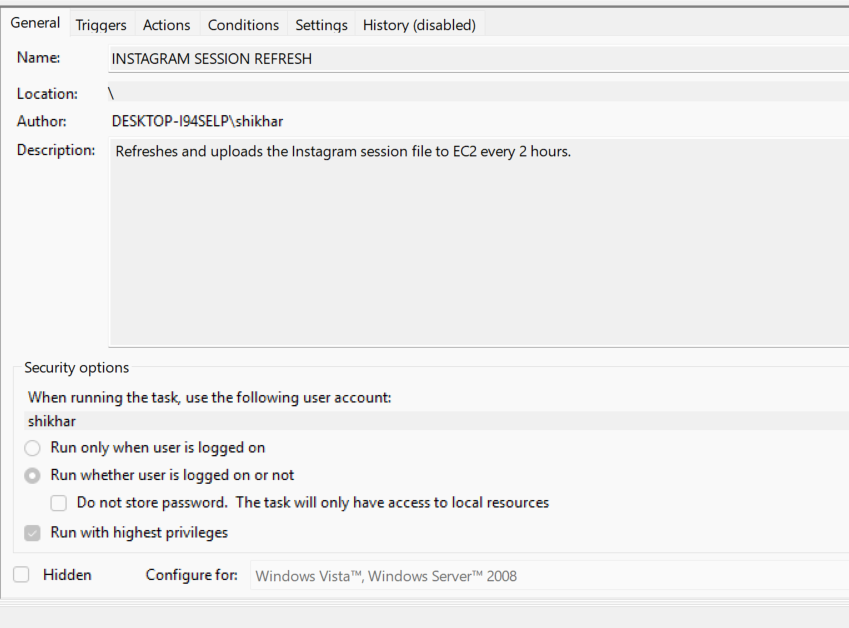
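The two security-group rules are easy to get subtly wrong in the console, so it can help to express them as data. A minimal sketch, assuming boto3 and hypothetical security-group IDs: the helpers only build the `IpPermissions` structures that EC2's `authorize_security_group_ingress` call accepts, so they can be inspected without AWS credentials. The key detail is that the instance rule references the ALB's security group (`UserIdGroupPairs`) instead of a CIDR range.

```python
from typing import Any, Dict, Iterable, List


def alb_ingress_rules(ports: Iterable[int] = (80, 443)) -> List[Dict[str, Any]]:
    """ALB security group: accept HTTP/HTTPS from anywhere on the internet."""
    return [
        {
            "IpProtocol": "tcp",
            "FromPort": port,
            "ToPort": port,
            "IpRanges": [{"CidrIp": "0.0.0.0/0", "Description": "public web traffic"}],
        }
        for port in ports
    ]


def instance_ingress_rules(alb_sg_id: str, app_port: int = 8000) -> List[Dict[str, Any]]:
    """EC2 security group: accept the app port only from the ALB's security group."""
    return [
        {
            "IpProtocol": "tcp",
            "FromPort": app_port,
            "ToPort": app_port,
            # Source is another security group, not an IP range.
            "UserIdGroupPairs": [{"GroupId": alb_sg_id, "Description": "from ALB only"}],
        }
    ]


def apply_rules(ec2_client: Any, alb_sg_id: str, instance_sg_id: str) -> None:
    """Push both rule sets using a boto3 EC2 client, e.g. boto3.client("ec2")."""
    ec2_client.authorize_security_group_ingress(
        GroupId=alb_sg_id, IpPermissions=alb_ingress_rules()
    )
    ec2_client.authorize_security_group_ingress(
        GroupId=instance_sg_id, IpPermissions=instance_ingress_rules(alb_sg_id)
    )
```

Because port 8000 is only reachable from the ALB's group, hitting the instance's public IP on port 8000 directly should time out, which is a quick way to confirm the setup.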

The Final Piece: Full Automation

I had a workaround for session expiration, but it required me to manually run a script and upload the file every few hours. This wasn't good enough. I wanted a true "set it and forget it" solution.

The Solution: A Local Python Script and Windows Task Scheduler

I wrote an all-in-one Python script on my local machine to handle the entire refresh process.

```python
# refresh_session.py
import os
import subprocess

from instaloader import Instaloader
from dotenv import load_dotenv

load_dotenv()

# --- CONFIGURATION ---
INSTAGRAM_USERNAME = os.getenv("INSTAGRAM_USERNAME")
INSTAGRAM_PASSWORD = os.getenv("INSTAGRAM_PASSWORD")
# ... other variables for PEM path, EC2 host, etc.


def generate_new_session():
    # ... logs in and saves session file ...
    ...


def upload_session_to_ec2():
    # ... runs the 'scp' command to upload the file ...
    ...


def restart_service_on_ec2():
    # ... runs the 'ssh' command to restart the systemd service ...
    ...


if __name__ == "__main__":
    # Calls the three functions in order.
    generate_new_session()
    upload_session_to_ec2()
    restart_service_on_ec2()
```

I then used the built-in Windows Task Scheduler to run this script automatically in the background every few hours.

Now, my local machine acts as a trusted agent. It periodically generates a fresh, valid session token and pushes it to the server, restarting the app to ensure it's always ready.
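The elided bodies in refresh_session.py could be filled in along these lines. This is a hypothetical sketch, not the exact script: the environment-variable names `EC2_HOST`, `PEM_PATH` and `REMOTE_SESSION_PATH` and the local filename `instagram.session` are assumptions. It uses Instaloader's `login()` and `save_session_to_file()` and shells out to the standard `scp` and `ssh` clients; the command builders are pure functions so they can be checked without touching the network.

```python
import os
import subprocess
from typing import List

SERVICE_NAME = "socialkart"  # matches /etc/systemd/system/socialkart.service


def scp_command(pem_path: str, local_file: str, host: str, remote_path: str) -> List[str]:
    """Build the scp command that copies the session file to the instance."""
    return ["scp", "-i", pem_path, local_file, f"{host}:{remote_path}"]


def ssh_restart_command(pem_path: str, host: str) -> List[str]:
    """Build the ssh command that restarts the systemd service remotely."""
    return ["ssh", "-i", pem_path, host, "sudo", "systemctl", "restart", SERVICE_NAME]


def generate_new_session(username: str, password: str, session_file: str) -> None:
    """Log in from the trusted home IP and save the session to session_file."""
    from instaloader import Instaloader  # lazy import: only this step needs it

    loader = Instaloader()
    loader.login(username, password)
    loader.save_session_to_file(session_file)


def refresh(local_session: str = "instagram.session") -> None:
    """New session, then upload, then restart; stops at the first failure."""
    username = os.environ["INSTAGRAM_USERNAME"]
    password = os.environ["INSTAGRAM_PASSWORD"]
    host = os.environ["EC2_HOST"]  # hypothetical, e.g. "ec2-user@<public-dns>"
    pem = os.environ["PEM_PATH"]  # hypothetical
    remote = os.environ["REMOTE_SESSION_PATH"]  # hypothetical

    generate_new_session(username, password, local_session)
    # check=True raises CalledProcessError if scp or ssh exits non-zero.
    subprocess.run(scp_command(pem, local_session, host, remote), check=True)
    subprocess.run(ssh_restart_command(pem, host), check=True)
```

Calling `refresh()` (after `load_dotenv()`) from the script's `__main__` guard gives the same generate, upload, restart sequence that Task Scheduler runs; with `check=True`, a failed upload stops the script before the service is restarted.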

Conclusion

Deploying a real-world ML application is a journey filled with hurdles that tutorials often skim over. From platform limitations and security triggers to understanding production-grade process management and networking, every step was a learning experience. By tackling each problem systematically—from the Instagram login to the final automation—I was able to build a robust, stable, and cost-effective deployment for my portfolio project.